最近网站访问非常慢,cpu 占用非常高,服务器负载整体也非常高,打开日志发现有很多不知名的蜘蛛一直在爬行我的站点,根据经验肯定是这里的问题,于是根据我的情况写了规则做了屏蔽,屏蔽后负载降下来了,下面整理下 iis 及 nginx 及 apache 环境下如何屏蔽不知名的蜘蛛 ua。

注意(请根据自己的情况调整删除或增加 ua 信息,我提供的规则中包含了不常用的蜘蛛 ua,几乎用不着,若您的网站比较特殊,需要不同的蜘蛛爬取,建议仔细分析规则,将指定 ua 删除即可)

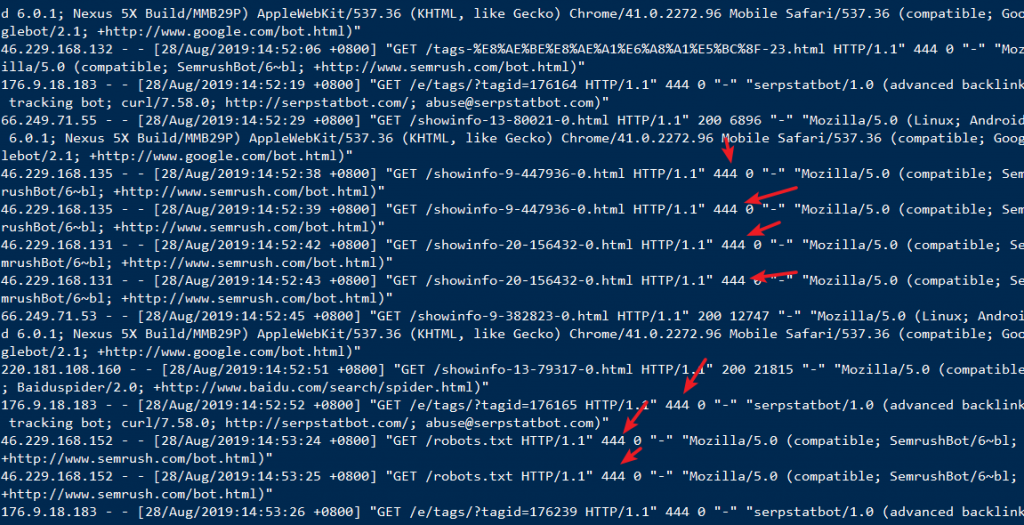

屏蔽后的效果

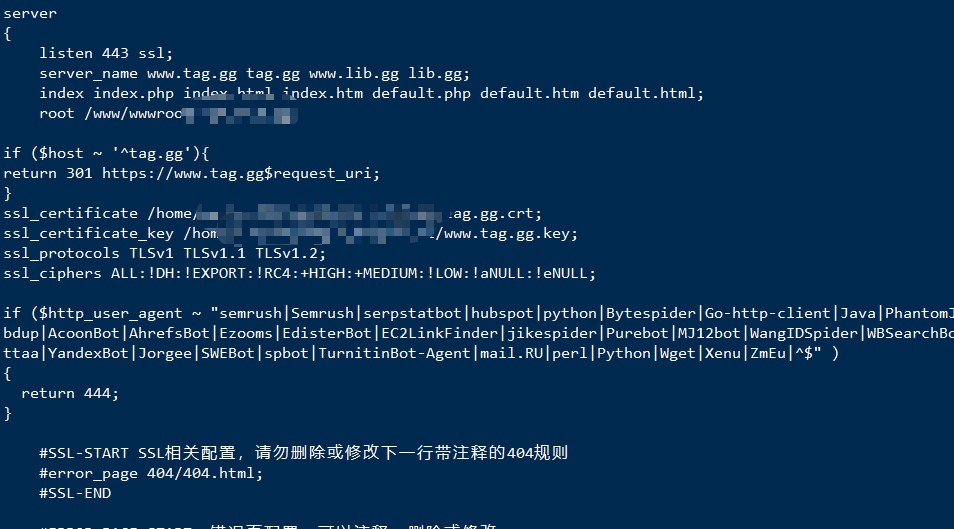

1、我网站是 nginx,下面是我的屏蔽规则,将规则添加到配置文件的 server 段里面,当这些蜘蛛来抓取时会返回 444;

if ($http_user_agent ~ "MegaIndex|MegaIndex.ru|BLEXBot|Qwantify|qwantify|semrush|Semrush|serpstatbot|hubspot|python|Bytespider|Go-http-client|Java|PhantomJS|SemrushBot|Scrapy|Webdup|AcoonBot|AhrefsBot|Ezooms|EdisterBot|EC2LinkFinder|jikespider|Purebot|MJ12bot|WangIDSpider|WBSearchBot|Wotbox|xbfMozilla|Yottaa|YandexBot|Jorgee|SWEBot|spbot|TurnitinBot-Agent|mail.RU|perl|Python|Wget|Xenu|ZmEu|^$" )

{

return 444;

}2、IIS7/IIS8/IIS10 及以上 web 服务请在网站根目录下创建 web.config 文件,并写入如下代码即可;

<?xml version="1.0" encoding="UTF-8"?>

<configuration>

<system.webServer>

<rewrite>

<rules>

<rule name="Block spider">

<match url="(^robots.txt$)" ignoreCase="false" negate="true" />

<conditions>

<add input="{HTTP_USER_AGENT}" pattern="MegaIndex|MegaIndex.ru|BLEXBot|Qwantify|qwantify|semrush|Semrush|serpstatbot|hubspot|python|Bytespider|Go-http-client|Java|PhantomJS|SemrushBot|Scrapy|Webdup|AcoonBot|AhrefsBot|Ezooms|EdisterBot|EC2LinkFinder|jikespider|Purebot|MJ12bot|WangIDSpider|WBSearchBot|Wotbox|xbfMozilla|Yottaa|YandexBot|Jorgee|SWEBot|spbot|TurnitinBot-Agent|mail.RU|perl|Python|Wget|Xenu|ZmEu|^$"

ignoreCase="true" />

</conditions>

<action type="AbortRequest" />

</rule>

</rules>

</rewrite>

</system.webServer>

</configuration>3、IIS6 请在 isapi 重写组件中添加规则

#Block spider

RewriteCond %{HTTP_USER_AGENT} (MegaIndex|MegaIndex.ru|BLEXBot|Qwantify|qwantify|semrush|Semrush|serpstatbot|hubspot|python|Bytespider|Go-http-client|Java|PhantomJS|SemrushBot|Scrapy|Webdup|AcoonBot|AhrefsBot|Ezooms|EdisterBot|EC2LinkFinder|jikespider|Purebot|MJ12bot|WangIDSpider|WBSearchBot|Wotbox|xbfMozilla|Yottaa|YandexBot|Jorgee|SWEBot|spbot|TurnitinBot-Agent|mail.RU|perl|Python|Wget|Xenu|ZmEu|^$) [NC]

RewriteRule !(^/robots.txt$) - [F]4、apache 请在.htaccess 文件中添加如下规则即可:

<IfModule mod_rewrite.c>

RewriteEngine On

#Block spider

RewriteCond %{HTTP_USER_AGENT} "MegaIndex|MegaIndex.ru|BLEXBot|Qwantify|qwantify|semrush|Semrush|serpstatbot|hubspot|python|Bytespider|Go-http-client|Java|PhantomJS|SemrushBot|Scrapy|Webdup|AcoonBot|AhrefsBot|Ezooms|EdisterBot|EC2LinkFinder|jikespider|Purebot|MJ12bot|WangIDSpider|WBSearchBot|Wotbox|xbfMozilla|Yottaa|YandexBot|Jorgee|SWEBot|spbot|TurnitinBot-Agent|mail.RU|perl|Python|Wget|Xenu|ZmEu|^$" [NC]

RewriteRule !(^robots\.txt$) - [F]

</IfModule>

来源:https://blog.tag.gg/showinfo-36-35757-0.html

© 版权声明

博主的文章没有高度、深度和广度,只是凑字数。利用读书、参考、引用、抄袭、复制和粘贴等多种方式打造成自己的纯镀 24k 文章!如若有侵权,请联系博主删除。

喜欢就点个赞吧

![【学习强国】[挑战答题]带选项完整题库(2020年4月20日更新)-武穆逸仙](https://www.iwmyx.cn/wp-content/uploads/2019/12/timg-300x200.jpg)

![【学习强国】[新闻采编学习(记者证)]带选项完整题库(2019年11月1日更新)-武穆逸仙](https://www.iwmyx.cn/wp-content/uploads/2019/12/77ed36f4b18679ce54d4cebda306117e-300x200.jpg)